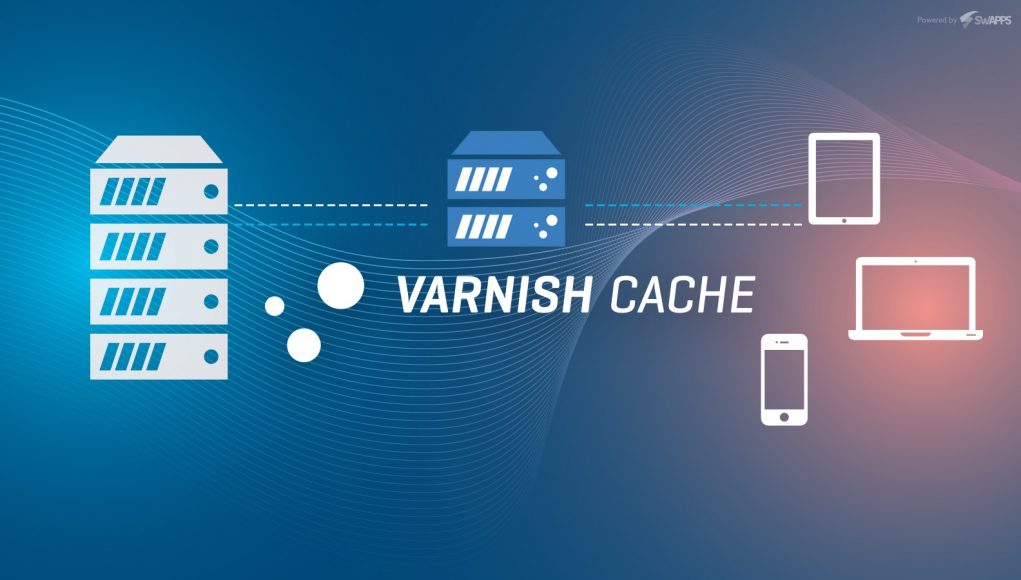

One of the best performance stacks out there is Varnish + Nginx coupled with PHP-FPM.

In this setup, all requests go through Varnish.

Varnish is a great caching solution to speed up any website.

But does it need to cache static files which are already fast to deliver?

Let’s look at the best approaches to delivering static files. How can we make static file delivery as fast as possible?

Don’t cache static files with Varnish. Use a separate Nginx for them

In theory, Nginx outperforms Varnish at serving static files, so ideally we should not route static files through Varnish at all.

So we have to split our configuration into two parts:

- an additional Nginx on a separate IP (or VPS), used solely for serving static files: static.example.com

- Varnish + Nginx + PHP-FPM, used solely for the time-consuming PHP requests: www.example.com

This kind of setup requires linking static files from a separate domain, i.e. static.example.com (served by Nginx alone), and has the following pros and cons:

- pro: it implements the cookie-free domain optimisation (requests to static.example.com carry no cookies, as long as the site’s cookies are scoped to www.example.com rather than to the whole example.com domain)

- con: it requires an additional IP or VPS (because Nginx #2 and Varnish can’t both listen on the same port of the same IP)

This setup pays off for large-scale projects, and it is worth considering whenever the budget allows for an extra server or IP.
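To make the split more concrete, here is a minimal sketch of the second Nginx’s server block, written out by a small shell function. Everything specific in it is an assumption for illustration: the second IP 203.0.113.10 (a documentation address), the document root /var/www/static and the function name write_static_vhost are hypothetical. Binding port 80 on one specific IP is what lets Nginx #2 coexist with Varnish; for that to work, Varnish must in turn listen on the main IP explicitly rather than on all addresses.

```shell
# Minimal sketch: write an Nginx server block for the static-only host.
# Hypothetical values, adjust to your server:
#   203.0.113.10     - the second IP dedicated to Nginx #2
#   /var/www/static  - the directory holding the static files
write_static_vhost() {
    out="$1"  # target file, e.g. /etc/nginx/sites-available/static.example.com
    cat > "$out" <<'EOF'
server {
    # Bind port 80 on the second IP only; Varnish keeps port 80 on the main IP
    listen 203.0.113.10:80;
    server_name static.example.com;
    root /var/www/static;

    location / {
        try_files $uri =404;   # serve existing files from disk, nothing else
        expires 30d;           # let browsers cache static assets
        access_log off;
    }
}
EOF
}
```

Run it as root with the real target, e.g. write_static_vhost /etc/nginx/sites-available/static.example.com, enable the site, check the syntax with nginx -t and reload Nginx.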

Cache static files with Varnish. And do it smart, even on a budget

Now let’s assume we’re on a budget: we can’t buy an additional IP, and / or we want to wait for our project to grow.

To make Varnish deliver static files nearly as fast as Nginx, we have to cache static files in Varnish.

Many people recommend against it since caching static files in Varnish would waste RAM, a resource that is quite precious on a small VPS.

Caching static files in Varnish may seem unnecessary, since those files are “fast” already. But as long as we can cache them, there is a performance gain: a cache hit is answered by Varnish directly, without a round trip to the Nginx backend.

We can make a smart move and use multiple storage backends by partitioning the Varnish cache:

- cache static files onto HDD

- cache everything else onto RAM

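This way we don’t waste RAM on static files: the cache is split into two storages, RAM for HTML and HDD for static files. (The file storage is memory-mapped, so the kernel may still keep frequently requested static files in its page cache, but that memory can be reclaimed whenever the system needs it.)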

To make this happen, we need to update the Varnish configuration. Assuming we run Varnish 5 on Ubuntu, we have to update two files.

Varnish configuration: /etc/default/varnish

This file contains the storage definition. We replace it with two named storages for our cache: “static” and “default”.

Replace this:

```shell
DAEMON_OPTS="-a :6081 \
             -T localhost:6082 \
             -f /etc/varnish/default.vcl \
             -S /etc/varnish/secret \
             -s malloc,256m"
```

with this:

```shell
DAEMON_OPTS="-a :6081 \
             -T localhost:6082 \
             -f /etc/varnish/default.vcl \
             -S /etc/varnish/secret \
             -s default=malloc,256m \
             -s static=file,/var/lib/varnish/varnish_storage.bin,1G"
```

So here we dedicate 1 GB of disk to the static file cache and 256 MB of RAM to everything else.

Then restart Varnish:

```shell
sudo service varnish restart
```
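One caveat worth checking: on systemd-based Ubuntu releases, the packaged varnish.service may start varnishd with its own hard-coded flags and ignore /etc/default/varnish, in which case the restart keeps the old single malloc storage. Below is a minimal sketch of a systemd drop-in that passes the two storages instead. The varnishd flags other than -s (including -j unix,user=vcache and -F) are an assumption based on the stock Ubuntu unit, so compare them with the output of systemctl cat varnish before using it; the function name write_varnish_override is hypothetical.

```shell
# Minimal sketch, assuming a systemd unit that ignores /etc/default/varnish.
# Flags other than -s mirror the stock Ubuntu unit (assumption: verify with `systemctl cat varnish`).
write_varnish_override() {
    dir="$1"  # normally /etc/systemd/system/varnish.service.d
    mkdir -p "$dir"
    cat > "$dir/override.conf" <<'EOF'
[Service]
# An empty ExecStart= clears the command inherited from the packaged unit
ExecStart=
ExecStart=/usr/sbin/varnishd -j unix,user=vcache -F -a :6081 -T localhost:6082 -f /etc/varnish/default.vcl -S /etc/varnish/secret -s default=malloc,256m -s static=file,/var/lib/varnish/varnish_storage.bin,1G
EOF
}
```

Run write_varnish_override /etc/systemd/system/varnish.service.d as root, then systemctl daemon-reload and systemctl restart varnish. The empty ExecStart= line matters: it clears the packaged command before the new one is set.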

VCL configuration file

Open your VCL configuration file, e.g.:

```shell
sudo nano /etc/varnish/default.vcl
```

We have to tell Varnish which responses go into which storage, so in our .vcl file we update the vcl_backend_response subroutine like this:

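```vcl
sub vcl_backend_response {
    # For static content, strip backend cookies and push the object to the "static" (file) storage.
    # The pattern only matches a real extension at the end of the path, optionally followed by a query string.
    if (bereq.url ~ "\.(css|js|png|gif|jpe?g|swf|ico)(\?.*)?$") {
        # Remove Set-Cookie: the built-in VCL does not cache responses that set cookies
        unset beresp.http.set-cookie;
        set beresp.storage_hint = "static";
        set beresp.http.x-storage = "static";
    } else {
        # Everything else (most notably HTML) goes to the "default" (malloc) storage
        set beresp.storage_hint = "default";
        set beresp.http.x-storage = "default";
    }
}
```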

It’s as simple as that: if the URL matches a static resource, the response is cached in the static storage (on HDD), whereas everything else (most notably HTML pages) is cached in RAM.
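The URL pattern deserves a quick test. A common pitfall is writing it as "\.(css|js|png|gif|jp(e?)g)|swf|ico": there the |swf|ico alternatives sit outside the group, so any URL that merely contains “swf” or “ico” anywhere (for example a path under /icons/) is sent to the static storage. The sketch below compares that pattern with an anchored one on a few sample URLs. It uses grep -E as a stand-in for Varnish’s PCRE engine, an approximation that holds here only because both patterns use syntax the two engines share; the sample URLs and the function name matches are hypothetical.

```shell
# Sketch: compare an unanchored and an anchored static-file pattern on sample URLs.
# grep -E stands in for Varnish's PCRE matching; both patterns use only shared syntax.
UNANCHORED_RE='\.(css|js|png|gif|jp(e?)g)|swf|ico'
ANCHORED_RE='\.(css|js|png|gif|jpe?g|swf|ico)(\?.*)?$'

# matches PATTERN URL: succeeds if URL matches PATTERN
matches() {
    printf '%s\n' "$2" | grep -Eq -- "$1"
}

for url in /css/site.css '/img/photo.jpeg?v=2' /favicon.ico /icons/list.php /index.php; do
    old=default
    new=default
    matches "$UNANCHORED_RE" "$url" && old=static
    matches "$ANCHORED_RE" "$url" && new=static
    echo "$url  unanchored=$old  anchored=$new"
done
```

With the unanchored pattern, /icons/list.php is classified as static because ico matches inside “icons”; the anchored pattern only matches a real extension at the end of the path, optionally followed by a query string such as ?v=2.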

Once the new VCL is loaded (for example after another restart), we can watch the two storages fill up by running sudo varnishstat. You will find the SMA.default and SMF.static groups: