If you’re looking for software to create and display forms on your website, Zigaform is worth a look. It is an all-in-one form builder that helps you build forms with no coding knowledge or prior design experience whatsoever. Beyond typical contact forms, it can build all kinds of forms and display them wherever you want.

The software can be purchased on CodeCanyon for $37. Before you spend the money, though, you can try the form examples to get a feel for the user experience the latest version offers.

What Is Zigaform?

Zigaform is a powerful PHP form builder plugin that lets you create professional forms for your site. With the free version, you can fight spam and style forms without code. Upgrading gets you your money’s worth in tons of additional features, integrations, and functionality.

Does Zigaform Offer a Free Plan?

Yes, it does! Zigaform is the best PHP form builder for your site, and the free version is 100% free forever.

Zigaform Lite lets you build different types of forms quickly and easily using a drag-and-drop interface.

You can use Zigaform Lite to:

- Make unlimited estimation forms

- Protect forms from spam

- Get unlimited responses

- Receive entry notifications by email

- Get started easily, even as a beginner

So… if Zigaform Lite is so great, why get the paid version? Let’s take a closer look.

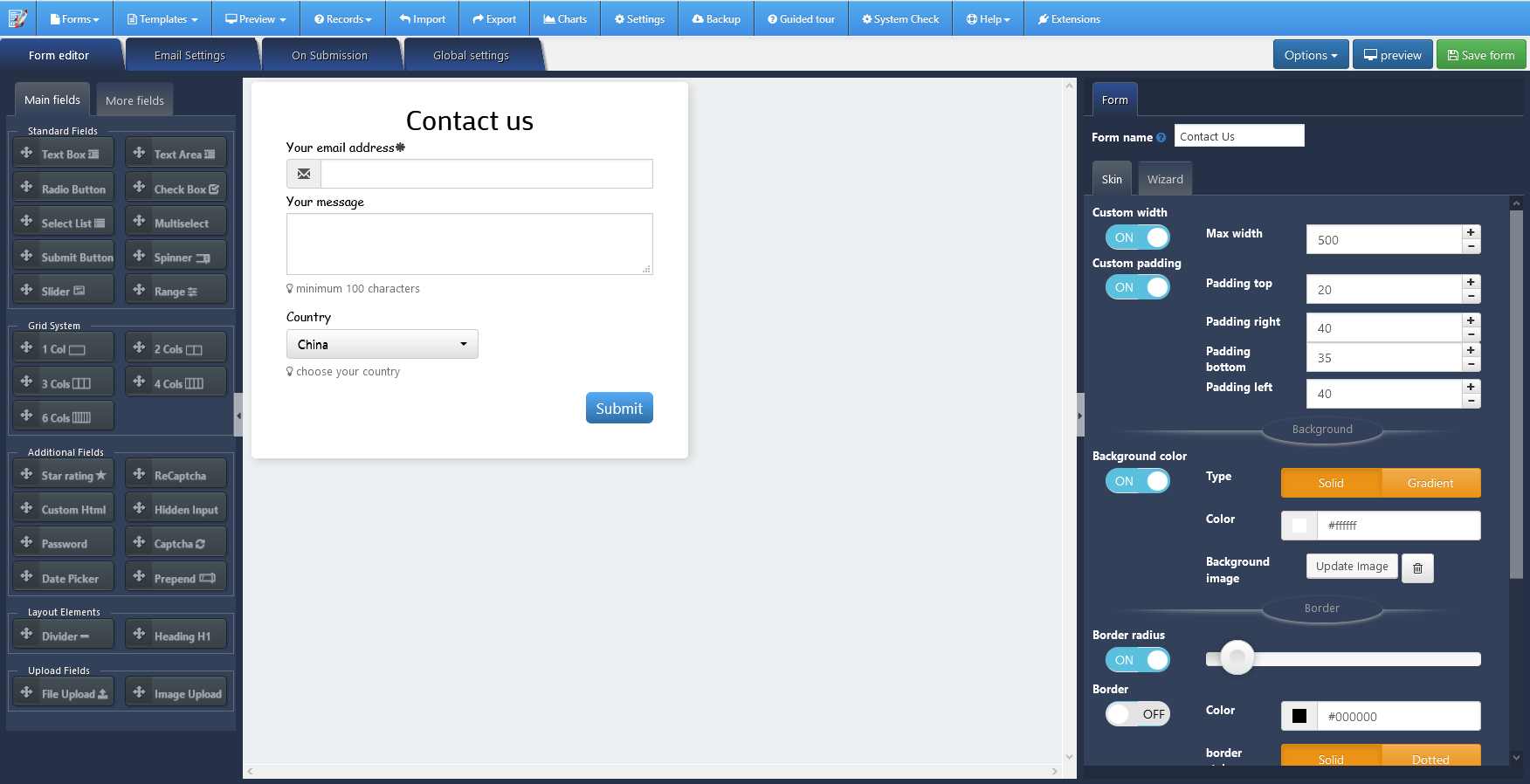

The Zigaform Form Editor

The Zigaform editing screen is where the magic happens. Every form is built from elements, and there are 42 to choose from, including text headings, single-line input fields, date pickers, and multiple-choice fields.

To insert an element onto your form, you just click it.

Once the element is on the form, you can click it again to edit it however you want. For example, you can change the wording of an input field such as Name and make it a required field. You can also add instructions so your users don’t get confused.
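Zigaform performs required-field checks for you, but the idea behind them is simple. The Python sketch below illustrates it under stated assumptions: `Field` and `validate` are hypothetical names, not part of Zigaform’s API, and "required" is taken to mean only that the value must not be blank.

```python
from dataclasses import dataclass


# Hypothetical field model for illustration; not Zigaform's internal API.
@dataclass
class Field:
    name: str
    label: str
    required: bool = False
    help_text: str = ""  # instructions shown to the user


def validate(fields: list[Field], submission: dict[str, str]) -> dict[str, str]:
    """Return a mapping of field name -> error message for blank required fields."""
    errors: dict[str, str] = {}
    for field in fields:
        # Treat missing or whitespace-only values as blank
        value = submission.get(field.name, "").strip()
        if field.required and not value:
            errors[field.name] = f"{field.label} is required."
    return errors


form = [
    Field("name", "Name", required=True, help_text="Enter your full name"),
    Field("company", "Company"),
]

print(validate(form, {"name": "  ", "company": "Acme"}))  # {'name': 'Name is required.'}
print(validate(form, {"name": "Ada"}))  # {}
```

An optional field such as Company never produces an error, while a required field fails whenever it is missing or contains only whitespace.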

With text fields, you can change the font color and font size. It has essentially all of the customization options you’d want from a form builder.

There are also global settings for the appearance of your forms. For example, you can change the width of your form and match the background color to your branding. This mix of great functionality and attractive design makes Zigaform worth it even if you only want to create good-looking submission forms.

Where this Zigaform review gets interesting, though, is the additional features.

The other thing I should mention is that you can embed your form on any page or post on your site using the widget code provided by the software, which makes it easy to place anywhere.

Full Zigaform Feature List

Zigaform includes pretty much every feature you’ll ever need to create a form and display it on your website. There’s not much you cannot accomplish with the plugin. It offers a wide selection of fields that let you control precisely how a form works and make it look just the way you want. You’ll be able to duplicate, import, export, manage, and analyze forms and data. You can set up email notifications for form entries and integrate the plugin with third-party plugins. You can create just about any kind of form, from the usual contact form to a more complex order form and everything in between.

Here’s a quick rundown of the features:

- Real-Time Visual Editor that shows what you’re creating as you build a form and offers an instant preview

- Ability to create any type of form from the very simple to the highly complex, including popup forms

- Many ready-made form templates for different types of forms, each of which can be minutely customized

- Multi-step forms, so you don’t scare away users with one never-ending form

- 42+ Form Elements available at a click to choose from and add to your form

- Drag-and-drop interface to add elements and fields and reorder them any way you like

- Choose fonts from the vast Google Fonts library

- Font Awesome icons to enhance your forms with professional vector icons, fonts and logos

- Resize columns simply by dragging their borders

- Add radio buttons or checkboxes and make them attractive with custom image upload

- Embed different media elements such as videos and maps

- Tooltips that appear on hover or click to prompt users

- Save time by duplicating previous forms and reusing them as templates

- Optional custom CSS for further customization

- Conditional logic for smart forms that adapt even as users input data

- AJAX for form submission, so there’s no need to reload the page

- Control form behavior after submission

- Import or export data and entire forms

- Automatic email notification on receipt of entries

- Loads JavaScript and CSS only when they are required on the page, so there’s no drag on speed

Beyond that, the plugin is fast, responsive (including multi-step forms), and cross-browser compatible. It supports SMTP for email, as well as pro add-ons. From the dashboard, you get full control over the style, position, and settings of the form. The plugin aims to be as feature-rich as possible while remaining easy to use.
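To make the conditional-logic feature from the list above more concrete, here is a minimal Python sketch of one common approach: a field governed by "show if" rules stays hidden until one of its rules matches the user’s current answers. The `ShowIf` rule format and the `visible_fields` function are hypothetical and are not how Zigaform stores or evaluates its rules.

```python
from dataclasses import dataclass


# Hypothetical rule: show `target` only when the answer to `source` equals `value`.
# Illustrative only; this is not Zigaform's rule schema.
@dataclass(frozen=True)
class ShowIf:
    target: str
    source: str
    value: str


def visible_fields(fields: list[str], rules: list[ShowIf], answers: dict[str, str]) -> list[str]:
    """Return the fields to display, in form order, given the answers so far."""
    shown = []
    for field in fields:
        field_rules = [rule for rule in rules if rule.target == field]
        # Fields without rules are always shown; otherwise any matching rule reveals the field
        if not field_rules or any(answers.get(rule.source) == rule.value for rule in field_rules):
            shown.append(field)
    return shown


fields = ["contact_method", "email", "phone"]
rules = [
    ShowIf(target="email", source="contact_method", value="Email"),
    ShowIf(target="phone", source="contact_method", value="Phone"),
]

print(visible_fields(fields, rules, {"contact_method": "Phone"}))  # ['contact_method', 'phone']
print(visible_fields(fields, rules, {}))  # ['contact_method']
```

Re-running the check whenever an answer changes is what lets a form "adapt even as users input data"; a real builder would also need to decide what happens to values typed into fields that later become hidden.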

Zigaform Add-ons & Extensions

A number of useful add-ons are available to enhance the functionality of your forms.

- reCAPTCHA: To add an extra layer of security for your forms

- Zapier: To streamline your workflow by connecting and integrating your forms with apps that you use regularly

Creating a Form With Zigaform

For the purposes of this review, I’ll be using the Zigaform Premium version, though the process for creating a form is the same in both versions (Lite and Pro).


Selecting a Form Template

First, press the Create Form button. Once the editor has loaded, select a template from the Template menu in the header.

These templates are a quick way to set up the skeleton for your form. Currently, the plugin includes many different templates.

You can always add or remove fields later, but they save you some time by eliminating the need to add basic fields. And you can, of course, always choose to start from a blank canvas rather than using one of the templates.

Because I want to choose something that applies to most readers, I’ll just go with the basic contact form template.

Using the Drag and Drop Form Builder

Once you select your template, you can start working with the drag-and-drop builder.

The drag-and-drop builder is one of the main features that sets Zigaform apart from much of the competition. Not only is it drag and drop, it’s a genuinely user-friendly drag-and-drop builder.

Some plugins tout “drag and drop” as a synonym for “simple”. Zigaform actually backs up that claim. The form builder is a breeze to use.

To customize any field, you just need to click on it.

Rearranging existing form fields is a simple matter of dragging them to the spot where you want them to appear.

Throughout the whole process of playing around with the form builder, I never experienced any glitching or lag. Additionally, it’s easy to place fields where you want them – no need to hit the “sweet spot” like some drag and drop builders.

Overall, the Zigaform builder is a pleasant, intuitive experience. I think that beginners and advanced users alike will enjoy the interface.

Next, I’ll move on to the higher-level settings you can configure for your form…

Configuring Email Settings

Notifications are emails sent to you whenever someone submits one of your forms. You can configure the email subject, the "from" name, the message, and more. This is a pretty standard concept for any contact form plugin.
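For readers curious about what such a notification amounts to, the Python sketch below composes one from a form entry using the standard library’s `email` package. It is an illustration, not Zigaform’s implementation: `build_notification` is a hypothetical helper, and the addresses and subject are placeholder values.

```python
from email.message import EmailMessage
from email.utils import formataddr


def build_notification(entry: dict[str, str], *, subject: str, from_name: str,
                       from_addr: str, to_addr: str) -> EmailMessage:
    """Compose a plain-text notification email summarizing one form entry."""
    msg = EmailMessage()
    msg["Subject"] = subject
    # formataddr renders the display name and address as "Name <address>"
    msg["From"] = formataddr((from_name, from_addr))
    msg["To"] = to_addr
    # One "field: value" line per submitted field, in submission order
    msg.set_content("\n".join(f"{key}: {value}" for key, value in entry.items()))
    return msg


# Placeholder entry and addresses, for illustration only
notification = build_notification(
    {"Name": "Ada", "Email": "ada@example.com", "Message": "Hello!"},
    subject="New contact form entry",
    from_name="Site Forms",
    from_addr="forms@example.com",
    to_addr="owner@example.com",
)
print(notification)
```

Delivering the message over SMTP, which the plugin supports, could then be done with `smtplib.SMTP(host, port).send_message(notification)`, where the host, port, and any login step depend on your mail provider.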

Embedding Contact Forms

Once you’re finished creating your form, the plugin will generate a widget code for you to use anywhere you want.

Just paste the widget code into any page or post on your site.

Documentation

Zigaform has a rich documentation section that explains how to accomplish common tasks with the plugin.

The documentation is available at https://kb.softdiscover.com/docs/zigaform-php-form-builder/

Topics are broadly grouped into the following categories:

- Getting Started

- Features

- Events

- Fields

- Add-ons

- Troubleshooting

Their guides are very simple and easy to understand. If you need some guidance with setting up the form, you can always rely on the documentation. If you can’t find a proper answer to your problem in the documentation section, you can always contact their support team to get personalized help.

Zigaform Reviewed

So that’s my full behind-the-scenes review of Zigaform PHP Form Builder. I’ve started switching to Zigaform for new sites I’m making. It’s well-designed, easy to use, and has all the functionality I could ever want from a plugin.